Hey there! Sometimes I see people say that AI art is stealing real artists’ work, but I’ve also seen someone say that AI doesn’t steal anything. Does anyone know for sure? Also, here’s a Twitter thread by Marxist Twitter user ‘Professional hog groomer’ talking about AI art: https://x.com/bidetmarxman/status/1905354832774324356

Discourse about AI, like so much other pointless online discourse™ that can be summarized as “does X suck/should X be abolished/will X exist under socialism,” follows two basic sides of an argument:

- X only sucks because of capitalism and under socialism, X will actually be good for society.
- X will undergo such qualitative change under socialism that it is no longer X but Y.

All AI discourse™ follows this basic pattern. On one side, you have people like bidetmarxman who argue that AI only sucks because capitalism sucks; on the other side, you have people who say that AI sucks while also saying that the various algorithms and technologies present in useful automation don’t count as AI but are something different.

The way to avoid falling into this trap is to ask these simple questions:

- Does X exist in AES (actually existing socialism)?
- What is AES’s relationship with X?

If we try to apply this to AI in general, the answers are very simple. AI is not only pushed by the Chinese state, but it’s already very much part of Chinese society, where even average people benefit from things like self-driving buses. China is even incorporating AI into its educational curriculum. This makes sense: people are going to use it anyway, so they might as well be educated on proper use and the pitfalls of misuse.

The question of AI art within China is far murkier. There seems to be some hesitation. For example, there was a recent law passed that stated AI art must be labeled as such. I don’t think they would make an effort to enforce disclosure of AI art being AI art if it were so innocent.

Well put. I was trying to articulate this viewpoint earlier in this thread, and I think this nails my general feelings about it: AI art needs to be labeled as such, as the potential for harm in passing it off as real is massive.

A lot of computer algorithms are inspired by nature. Sometimes when we can’t figure out a problem, we look at how nature solves it, and that inspires new algorithms. One problem computer scientists struggled with for a long time was tasks that are very simple for humans but very complex for computers, such as converting spoken words into written text. Everyone’s voice is different, the same person may speak in different tones, and recordings may have different background audio, different microphone quality, etc. There are so many variables that writing a giant program to account for them all with a bunch of IF/ELSE statements is just impossible.

Computer scientists recognized that computers are very rigid logical machines that compute instructions serially, like stepping through a logical proof, whereas brains are very decentralized, massively parallel computers that process everything simultaneously through a network of neurons. A brain’s “programming” is determined by the strength of the connections between its neurons, which are analogue rather than digital and only produce approximate solutions, not as rigorous as a traditional computer’s.

This led to the birth of the artificial neural network. This is a mathematical construct that describes a system of neurons with configurable strengths for all its connections. From there, mathematicians and computer scientists figured out ways that such a network could be “trained,” i.e. configure its connections automatically in order to “learn” new things. Since it is mathematical, it is hardware-independent. You could build dedicated hardware to implement it, a silicon brain if you will, but you could also simulate it in software on a traditional computer.
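
To make “configurable connection strengths” and “training” concrete, here is a minimal sketch of the simplest possible case: a single artificial neuron (a perceptron) that learns logical AND by nudging its weights whenever it gets an example wrong. The toy task, the learning rate, and the function names are illustrative assumptions; real networks have vastly more neurons and are usually trained with gradient descent and backpropagation rather than this perceptron rule.

```python
# A single artificial "neuron": a weighted sum of its inputs plus a bias,
# passed through a step function that decides whether it "fires" (1) or not (0).
def fire(weights, bias, inputs):
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Adjust connection strengths automatically using the perceptron rule."""
    n_inputs = len(examples[0][0])
    weights = [0.0] * n_inputs  # every connection starts at zero strength
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - fire(weights, bias, inputs)
            # Nudge each connection in the direction that reduces the error.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical toy task: learn logical AND from four labeled examples.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
for inputs, target in AND_EXAMPLES:
    print(inputs, "->", fire(weights, bias, inputs), "(expected", target, ")")
```

Nobody writes an IF/ELSE rule for AND here; the behaviour ends up encoded entirely in the learned numbers `weights` and `bias`.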

Computer scientists quickly found that by applying this construct to problems like speech recognition, they could supply the neural network with tons of audio samples and their transcribed text, and the network would automatically find patterns in them and generalize from them, so that when brand new audio was recorded, it could transcribe it on its own. Suddenly, problems that at first seemed unsolvable became very solvable, and the approach started to be implemented in many places; language translation software, for example, is also based on artificial neural networks.

Recently, people have figured out that this same technology can be used to produce digital images. You feed a neural network a huge dataset of images and associated tags that describe them, and it learns to generalize patterns associating the images with the tags. Depending on how you train it, this can go both ways. There are img2txt models, called vision models, that can look at an image and tell you in written text what it contains. There are also txt2img models that you can feed a description of an image, and they will generate an image based on it.

The underlying technology is ultimately the same across text-to-speech, voice recognition, translation software, vision models, image generators, LLMs (which are txt2txt), etc. They are all fundamentally doing the same thing: taking a neural network and a large dataset of inputs and outputs, and training the network so it generalizes patterns from the data and can thus produce appropriate responses to brand new data.

A common misconception about AI is that it has access to a giant database and the outputs it produces are just stitched together from that database, kind of like a collage. However, that’s not the case. The neural network is trained with far more data than could possibly fit inside it, so it cannot remember its entire training data (a network that memorized its training data instead of learning general patterns would be suffering from what’s known as overfitting, which makes it perform poorly on new inputs). What actually ends up “distilled” from training is just a big file called the “weights” file, which is a list of all the neural connections and their associated strengths.

When the AI model is shipped, it is not shipped with the original dataset and it is impossible for it to reproduce the whole original dataset. All it can reproduce is what it “learned” during the training process.
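
A deliberately tiny analogy for the point about weights: the sketch below “trains” a model with just two weights (a straight line, y ≈ w·x + b) on 200 hypothetical noisy examples using gradient descent. What comes out is a short file of two numbers rather than the data itself, and it can still answer an input it was never shown. This is a line, not a neural network, so it illustrates the shape of the argument rather than proving anything about a particular image model.

```python
import json
import random


def train_line(data, epochs=1000, learning_rate=0.5):
    """Fit y ≈ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # the model's entire set of "weights"
    n = len(data)
    for _ in range(epochs):
        # Gradients of the average squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return {"w": w, "b": b}


def predict(weights, x):
    return weights["w"] * x + weights["b"]


# Hypothetical training data: 200 noisy samples of the pattern y = 3x + 2.
rng = random.Random(0)
training_data = []
for _ in range(200):
    x = rng.random()
    training_data.append((x, 3 * x + 2 + rng.gauss(0, 0.1)))

weights = train_line(training_data)

# What gets "shipped" is only the weights, not the training data.
weights_file = json.dumps(weights)
dataset_file = json.dumps(training_data)
print(f"{len(dataset_file)} characters of data -> {len(weights_file)} characters of weights")
print(f"prediction for a new input 0.5: {predict(weights, 0.5):.2f}")
```

The serialized data runs to thousands of characters while the weights fit in a few dozen, and the prediction for 0.5 lands close to 3.5 (that is, 3·0.5 + 2), even though 0.5 itself was not one of the training inputs.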

When the AI produces something, it starts with an “input” layer of neurons, kind of like sensory neurons; that input may be a text prompt, an image, or something else. It then propagates that information through the network, and when it reaches the end, the final set of neurons forms the “output” layer, kind of like motor neurons associated with some action, like plotting a pixel with a particular color value or writing a specific character.
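
A minimal sketch of that input-to-output flow, assuming a toy network with 3 input neurons, 2 hidden neurons, and 2 output neurons whose weights are made-up numbers (a trained model would have learned them). Each layer takes a weighted sum of the previous layer’s values and squashes it with a sigmoid function, one common choice of activation among several.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of every input, plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, network):
    """Propagate values from the input layer through each layer to the output."""
    activations = inputs
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical hand-picked weights: 3 input neurons -> 2 hidden -> 2 output.
NETWORK = [
    ([[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]], [0.0, 0.1]),  # hidden layer
    ([[1.2, -0.7], [-0.5, 0.9]], [0.05, -0.05]),  # output layer
]

output = forward([1.0, 0.0, 0.5], NETWORK)
print(output)
```

Running the same input through the same weights always gives the same output numbers; in a real model those output values would be read as, for example, scores for which character to write next.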

There is a setting called “temperature” that controls how much randomness goes into choosing each output from the probabilities the network produces. That way, if you run the algorithm many times with the same prompt, you will get different results, because the final choice is sampled randomly rather than fixed.
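
A sketch of how temperature typically works in text models, assuming the network has already produced raw scores for three candidate next words (the words and scores are hypothetical). The scores are divided by the temperature and turned into probabilities with a softmax, and then one word is drawn at random: a low temperature concentrates probability on the top choice, while a high one flattens the odds.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw output scores into probabilities; temperature must be > 0 here."""
    scaled = [s / temperature for s in scores]
    highest = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(tokens, scores, temperature, rng):
    """Randomly pick one token, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical scores a model might assign to candidate next words.
tokens = ["cat", "dog", "fox"]
scores = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(scores, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random()  # unseeded: each run of the script may pick differently
print([sample(tokens, scores, 1.0, rng) for _ in range(5)])
```

At temperature 0.2 nearly all the probability goes to “cat”; at 2.0 the three options become much closer, so repeated runs vary more.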

Would we call this process of learning “theft”? Personally, I think it’s weird to call it “theft.” It is directly inspired by how biological systems learn, with some differences to make it better suited to run on a computer, of course, but the very broad principle of neural computation is the same. I can look at a bunch of examples on the internet and learn to do something, such as looking at a bunch of photos as reference to learn to draw. Am I “stealing” those photos when I then draw an original picture of my own? People who claim AI is “stealing” either don’t understand how the technology works, or they reach for the moon, claiming things like “it doesn’t have a soul, so it doesn’t count,” or they point to differences between AI and humans that do exist but aren’t relevant.

Of course, this only applies to companies that scrape data that was posted publicly for anyone to freely look at, like on Twitter. Some companies have been caught illegally scraping data that was never meant to be freely available, like Meta, which got in trouble for scraping LibGen, where a lot of the material is supposed to be behind a paywall. However, the law already protects people whose paywalled data gets illegally scraped (Meta is being sued over this), so it’s already on the content creator’s side here.

Even then, I still wouldn’t consider it “theft.” Theft is when you take something from someone in a way that deprives them of using it. In that case, it would be piracy: copying someone’s intellectual property for your own use without their permission, which ultimately doesn’t deprive the original owner of its use. At most, you can say that in some cases AI art, and AI technology in general, can be based on piracy. But this is definitely not a universal statement. And personally I don’t even like IP laws, so I’m not exactly the most anti-piracy person out there lol

I don’t wanna get too deep into the weeds of the AI debate because, frankly, I have a knee-jerk dislike for AI, but from what I can skim of hog groomer’s take, I agree with their sentiment. A lot of the anti-AI sentiment is based on longing for an idyllic utopia where a cottage industry of creatives exists, protected from technological advancements. I think this is an understandable reaction to big tech trying to cause mass unemployment and climate catastrophe for a dollar while bringing down the average level of creative work. But stuff like this prevents us from sincerely considering if and how AI can be used as tooling by honest creatives to make their work easier, faster, or better. As of now, this kind of nuance has no place in the mainstream, because the mainstream has been poisoned by a multi-billion-dollar flood of marketing material from big tech consisting mostly of lies and deception.

Well… in my experience, one side (people who draw, good or bad, and mostly make a living from porn commissions) complains that AI art is stealing their monies and produces “soulless slop”

and the other side (gooners without money and techbros) argues that this is the future of eternal pleasure: making lewd pics of big-breasted women without dealing with artistic divas, paying money, or “wokeness”

Ok. Let’s be real here. How many of you defending AI art have used it to make porn? Be honest with yourselves. Could something like that be clouding your views of it?

I know someone who was better able to process childhood trauma with the help of AI-assisted writing. I will let that speak for itself.

Glad that helped them, and it was probably a hell of a lot cheaper than a psychologist would’ve been, but we aren’t talking about a chatbot, we’re talking about AI generated art, using the colloquial meaning of “pretty pictures”

The so-called defenses of “AI art” in this thread seem to have been mostly about generative AI as a whole, so text is included under that umbrella as far as I’m concerned. Also, annoying as the trend of calling AI pictures “AI art” is (in my view), writing is often considered an art form too, so…

Anyway, I don’t really have time to get into a long thing right now (or at least, my version of long), but the point of my comment was “here’s something that is actually happening with a real person who uses AI,” instead of projecting motives onto people in a way that ignores the content of what has been said so far in this thread. Generative AI is one area where I can confidently say I am probably way more familiar than most people, and this implication of “clouded views” is a conversation-ender kind of comment, not something that clarifies anything.

I posted this comment in a pretty snide way, that’s for sure, but I think it does sum up a lot of my views on AI art well. A lot of defenders of it aren’t looking for genuine use cases; they’re just demanding unlimited access to the treat machine, and that’s sad to see in a space like this.

If you’ve read my other comments on AI pictures specifically and would like to discuss what I have said in them, then sure, but if I’m coming across as defensive, it’s because of the sheer number of people here who have presupposed what anti-AI-picture people believe. If people are going to talk past me, I’m going to make snide comments about them. I do think a lot of people are becoming addicted to the treat generators, and as such, will rationalise away their addiction and start making accusations against people who “want to take their treats away,” without really examining whether this, as it currently exists, is actually good for society. A lot of them seem to presuppose a kind of “platonic ideal” of AI art that just brings joy to people, rather than the capitalist treat machine it is currently being used as.

(Fair warning, I have time to do a long thing now… bear with me, or don’t, up to you.)

I’d have to go find those other comments of yours, but for the moment, I will say, I kind of get it. I do remember seeing at least one comment that was sniping at anti-AI views and being uncharitable about it, and I kinda tried to just skate past that aspect of it and focus on my own read of the situation, but I probably should have addressed it because it was a kind of provocation in its own way.

But yeah, I can get defensive on this subject myself because of how often anything nuanced gets thrown out. Personally, I’ve put a lot of thought into how I use generative AI and why, and one of my limits is that I don’t share AI-generated images beyond a very limited outlet (I’m not sharing them on the wider internet or on websites where artists share things). Another is that I don’t use AI-generated text in anything I would publish and only use it for my own development, whether that’s development as a writer or something like a chatbot to talk about things, etc.

Can they be “treat generators” in a way? Yeah, I guess that’s one facet of them. But so is high-speed internet in general. Even before generative AI kicked into high gear, people could find novel stimuli online faster than they could ever “use it up,” because new stuff was being produced at such a rate. The main difference in that regard is that generative AI is more customizable and personal. But the point is, it’s not as though it’s the only source of “easy treats.” Probably the most apt comparison in that respect is high-speed internet itself, along with the endless churn of “new content.”

Furthermore, part of the reason I chose the example of use I did in my previous post is that while, yes, there are people who use generative AI for porn (or “smut,” as some would call it in the case of text generation), the way you framed your post left essentially no way to respond to it directly without walking into a setup that makes the responder look bad. If the person says no, I don’t use it for that, you could just say, “Well, I meant the people who do, and I’m sure some do.” And if the person says yes, I do use it for that, you can say, “Hah, got you! That is what your position boils down to, and now I’m going to shame you for your use of pornography.” It also carries an implication that this one specific use would cloud someone’s judgment and other uses wouldn’t, which makes it sound like a judgment about pornography specifically that has nothing to do with AI. That is a whole other can of worms in itself, especially when we’re talking about “porn” that involves no real people vs. porn that does (the second being where the most intense and justifiable opposition to porn usually is).

Phew. Anyway, I just wish people on either end of it would do less sniping and more investigating. They don’t have to change their views drastically as a result. Just actually working out what is going on instead of making rude guesses would go a long way. Or at the very least, when making estimations, doing it from a standpoint of assuming relatively charitable motives instead of presenting people in a negative light.

You’re absolutely right that this has largely devolved into people snapping past each other and not really listening to each other. I made my comment above because it felt like this place was behaving more like Reddit or Twitter than Lemmygrad, over AI of all things, and as AI is a personal threat to my livelihood, I probably took that a lot more personally than I needed to. I felt like I was being chased off the platform by an imagined army of treat-obsessed AI techbros. I assumed that Lemmygrad in general held a position on AI much more similar to my own, and it was quite a shock. But it’s not helpful to just insult people for disagreeing with me.

While I was “snapping back” in retaliation, I wasn’t really contributing anything of substance, and certainly wasn’t contributing productively. It was more of a parting shot than anything else, out of frustration with what I saw as people basically ignoring the potential problems of this technology in favour of being OK with consuming more capitalist-owned treats. But I would rather have a proper discussion of the technology and its implications than catty back-and-forth insults. I do think this technology is going to do more harm than good to society overall, but that doesn’t mean it doesn’t have benefits.

Like any tool, it depends on how it is used. I just felt a lot of people were acting as if this were being implemented in a utopian socialist society, ignoring any discussion of how the capitalist class will use it to manipulate and control people. It really didn’t help that most of the pro-AI-picture arguments involved some comment about how IP law is bad. Like yeah, it is, but we live in a capitalist system that uses it, and big corporations getting to ignore IP law isn’t a good thing; IP law already exists for their benefit against the people. So seeing people use the strawman argument that artists only care about their art “IP” or whatever felt very disingenuous, very “twitter debate bro” even. And if you’ve seen how I act when a twitter debate bro wanders in here, I think it’s pretty easy to see why I made the comment above when I felt like I was surrounded by them. I got too defensive, and ended up stifling discussion because I didn’t like how I felt the discussion was being stifled.

Obviously this problem didn’t start with AI. The internet algorithms before it basically created the conditions for AI to be used the way most people use it, as a treat machine instead of a purpose-built tool, since people are already used to instant gratification. Which I suppose is what I’m really upset about overall. But this isn’t new; as you said, the internet, and television before it, have basically trained people to always want new “treats” to consume instead of more productive and self-actualising hobbies and pastimes.

Since you used a personal example, I might use one too. A friend of mine really, really struggled in his 20s. He had some productive hobbies as a teen, but stopped doing them once he became an adult. He would always end up going down the “path of least resistance” with regard to his free time, which usually involved just watching TV, playing video games, and drinking. His depression became far worse as a result. He felt like he had nothing to live for, because quite frankly, he didn’t. It wasn’t just the depression talking; he genuinely had nothing of substance to look forward to in his life. It was just an endless cycle of work and consumption. It wasn’t until he started getting back into his old physical hobbies, where he was actually doing something and improving a skill, that he started to improve.

Now, this isn’t a rant against consumer entertainment so much as me trying to say that spending one’s time only ever consuming, instead of creating or improving, will completely wreck a person. I know the usual argument against this is “but I’m too tired to do anything else,” but people are tired because they get caught in a cycle where they do nothing but sell their labour and then spend their wages on consuming things. I know a lot of hobbies under capitalism have very expensive barriers to entry, but you know what doesn’t? Art. All you need is paper, pencils, and erasers.

So to bring this ramble back to the topic at hand, AI pictures are the consumer-focused replacement for art itself, one of the few easy “just pick up the tools and do it” hobbies there are. Instead of doodling pictures for fun or to de-stress, someone can type in a prompt. It turns an act that requires some effort, some self-reflection, some struggle, into what is essentially a slot machine: you type in the prompt, hoping to get a “good enough” version of what you asked for. It looks “better” than anything an amateur could make themselves, so a lot of people would much rather do this than struggle and work hard at developing a skill, especially after a long, soul-crushing day at work. But in my opinion, this leads to a negative spiral for people. I want to see people become the best version of themselves they can be, not necessarily through art, but through active improvement of themselves. And a quick, easy shortcut like AI pictures means that they’ll never take that first step towards actually learning a skill.

TL;DR on my previous comments: I think the AI picture industry (and potentially the AI industry in general) is predatory and addictive, like gambling. I don’t think the technology itself is the problem, but I do think it is going to be used to further social control of the masses and destroy what little sense of self and community people have left under capitalism. I do think it has major potential to be incredibly addictive, to go back to my snide comment, very much like how the porn industry can be addictive. I’ve likened it to a treat generator a lot, and in many ways it functions like a Skinner box: it doesn’t give you exactly what you want every time, so you have to keep pushing the button over and over until it does. Just like a slot machine. These programs are built in a way that causes and exacerbates addiction. Right now a lot of these AI picture programs are free, or have “small” fees, but that’s how literally every business starts: they charge as little as possible to undercut the competition, then once they get a monopoly or close enough, they jack up the prices. I’ve felt like a lot of the AI art defenders in this thread have completely ignored this aspect of it, which I think is the one we, as socialists, should be most concerned about. Not that the technology itself is inherently bad, but how it will be used under capitalism to control and manipulate people.

Thanks for taking the time to write a thoughtful and nuanced response about it. I don’t think I disagree with any of the concerns you brought up, tbh. They all seem like valid things to be concerned about with AI. You might be surprised to know that I know of one person who was in AI dev for years as a UX designer, including for image generation, who also had strong opinions about the value of doing creative work “by hand” (i.e. not automating it) for development, for expression, etc. It may sound like a contradiction that he was like that while also working in AI, but it’s interesting to me because it helps show that not everybody who has worked in AI is a full-on tech-bro. Of course, you could argue the end result is the same, that he contributed to enabling the tech and all its consequences, but still, I find it interesting.

I do think active building/learning instead of just consuming is important. It’s a bit murky sometimes, I think, when considering things like video games, where you do have to learn, but you are also learning something that often has no application to RL. With image gen, there is learning to prompt, however minor that is, and some people do mix it with actual drawing, but overall yeah, you could low effort type in prompts and press button until you get results you want. And to make an analogy to food, that is probably more like eating sugar than nutrients. Unlike with food, you won’t get diabetes from chronically overdoing it, but you might find yourself dependent on the “hit” of the novelty and have a hard time removing it from your life. The design of image gen is very similar to gacha, even if not intentionally.

That said, in terms of self improvement as a whole and a sense of fulfillment, I’m not convinced that hobbies like drawing are a great answer in and of themselves, but they are notably better than pressing button and adjusting prompt. Where my mind goes there is, we’re looking at broader societal problems, where people consciously or unconsciously notice that the society they live in is sort of nihilistic and exploitative, and that can be depressing in itself. Individualist development of hobbies may be more of a liberal solution overall than a revolutionary one. I think we can do better, though in the interim, again the individualist solution is probably better than no solution. Similar to how chatbots can be helpful for some people for processing things, but they are no long-term solution to alienation and isolation. So there’s the harm reduction perspective on it and what form that can take, and then there’s what to aim for. I think in terms of what to aim for, we need community rebuilt and communal things for people to do more so (that don’t have big cost barriers).

The messaging from the anti-generative-AI people is very confused and self-contradictory. They have legitimate concerns, but when the people who say “AI art is trash, it’s not even art” also say “AI art is stealing our jobs”…what?

I think the “AI art is trash” part is wrong. And it’s just a matter of time before its shortcomings (aesthetic consistency, ability to express complexity etc) are overcome.

The push against developing the technology is misdirected effort, as it always is with liberals. It’s just delaying the inevitable. Collective effort should be aimed at affecting who has control of the technology, so that the bourgeoisie can’t use it to impoverish artists even more than they already have. But that understanding is never going to take root in the West because the working class there have been generationally groomed by their bourgeois masters to be slave-brained forever losers.

A mechanical arm in a factory back in the 80s wasn’t as effective a worker as a person; it was, however, much cheaper to run than hiring a worker. Something doesn’t need to be a perfect replacement in order to replace workers.

Funny how so many alleged “socialists” stop caring about workers losing their jobs and bargaining power the instant the capitalists try to replace them with a funny treat machine.

Last I checked, Marxists are not opposed to automation and development of productive forces.

It’s a disruptive new technology that disrupts an industry that already has trouble giving a living to people in the Western world.

The reaction is warranted, but it’s now a fact of life. It just shows how stupid our value system is, and most liberals have trouble reconciling that their hardship is due to their values and economic system.

It’s just another means of automation and should be seized by the experts to gain more bargaining power; instead, they fear it and bemoan reality.

So nothing new under the sun…

Yes, and the solution to the new trouble is exactly the same as the solution to the old trouble, but good luck trying to tell that to liberals when they have a new tree to bark up.

I tried, but they are so far into thinking that communism doesn’t work…

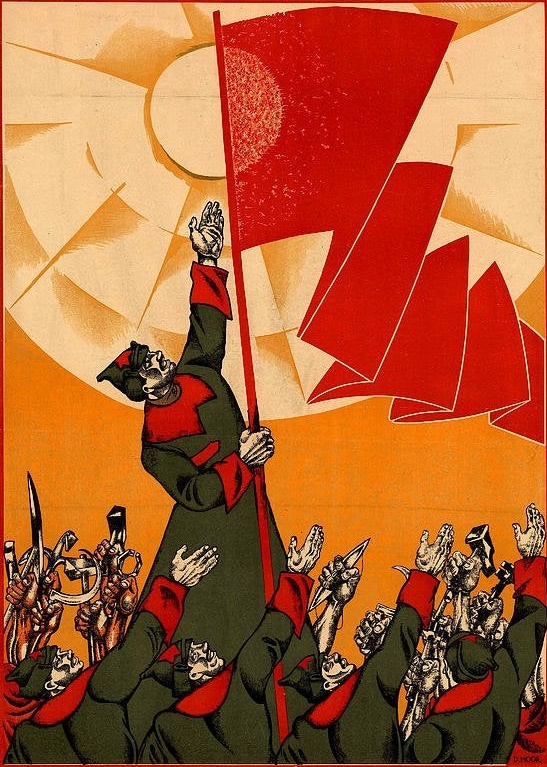

it’s basically this

I would argue that generated images that are indistinguishable from human art should require an AI-use disclosure. The difference between computer-generated images and human art is that computers do not know why they draw what they draw, whereas every decision made by a human artist is intentional. That is where I draw the line. Computer-generated images don’t have intricate meaning; human-created art often does.

> every decision made by a human artist is intentional

the weird perspective in my work isn’t an artistic choice, i just suck at perspective lol

Yes but you intentionally suck, otherwise you would just train for thousands more hours. Or be born with more talent. /s

I don’t really see how a human curating an image generated by AI is fundamentally different from a photographer capturing an interesting scene. In both cases, the skill is in being able to identify an image that’s interesting in some way. I see AI as simply a tool that an artist can use to convey meaning to others. Whether the image is generated by AI or any other method, what ultimately matters is that it conveys something to the viewer. If a particular image evokes an emotion or an idea, then I don’t think it matters how it was produced. We also often don’t know what the artist was thinking when they created an image, and often end up projecting our own ideas onto it that may have nothing to do with the original meaning the artist intended.

I’d further argue that the fact that it is very easy to produce high-fidelity images with AI makes it that much more difficult to actually make something that’s genuinely interesting or appealing. When generative models first appeared, everybody was really impressed with being able to make good-looking pictures from a prompt. Then people quickly got bored because all these images end up looking very generic. Now that the novelty is gone, it’s actually tricky to make an AI-generated image that isn’t boring. It’s a similar phenomenon to what we saw happen with computer game graphics. Up to a certain point, people were impressed by graphics becoming more realistic, but eventually it just stopped being important.

Kind of unrelated, but if you were to start learning about AI today, how would you go about it for help with programming (with generating images as a side objective)?

Having followed the news for quite some time, I see AI is here to stay, not as something super amazing but as a useful tool. So I guess it’s time to adapt or be left behind.

For programming, I find DeepSeek works pretty well. You can kind of treat it like a personalized StackOverflow. If you have a beefy enough machine, you can run models locally. For text-based LLMs, Ollama is the easiest way to run them, and you can connect a frontend to it; there are even plugins for VS Code, like Continue, that can work with a local model. For image generation, stable-diffusion-webui is pretty straightforward, while ComfyUI has a bit of a learning curve but is far more flexible.
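
For the local route, here is a minimal Python sketch of talking to Ollama from your own scripts rather than through a frontend. It assumes Ollama is installed and serving on its default address (http://localhost:11434) and that you have already pulled a model; it posts to Ollama’s `/api/generate` endpoint with streaming turned off and reads the reply from the `response` field. Only the standard library is used, and the helper names `build_request` and `ask` are illustrative.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumption: default install, default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt, url=OLLAMA_URL):
    """Build a POST request asking for one complete, non-streamed reply."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt):
    """Send one prompt to a locally running Ollama server and return its reply text."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

For example, `ask("your-model-name", "Explain this regex: ^a+b$")`, where `your-model-name` is a placeholder for whichever model you have pulled (`ollama list` shows them).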

Thank you, I’ll check them out.

It can be frustrating sometimes. I’ve encountered people online whose takes on things I otherwise respected, and then they would go viciously anti-AI in a very simplistic way. Having followed the subject in a lot of detail, engaging directly with services that use AI and people who use those services, and trying to discern what stance makes sense and why, I found those reactions very shallow and knee-jerk. I saw, for example, how with one AI service, Replika, there were on the one hand people whose lives were changed for the better by it, and on the other hand people whose lives were thrown for a loop (understatement of the century) when the company acted duplicitously and started filtering its model in a hamfisted way that made it act differently and reject people over things like a roleplayed hug. There’s more to that story, some of which I don’t remember in as much detail now because it happened over a year ago (maybe over two years ago? has it been that long?). But the point is, I have directly seen people talk about how AI made a difference for them in some way. I’ve also seen people hurt by it, usually as an indirect result of a company’s poor handling of it as a service.

So there are the fears that surround it and then there is what is happening in the day to day, and those two things aren’t always the same. Part of the problem is the techbro hype can be so viciously pro-AI that it comes across as nothing more than a big scam, like NFTs. And people are not wrong to think the hype is overblown. They are not wrong to understand that AI is not a magic tool that is going to gain self-awareness and save us from ourselves. But it does do something and that something isn’t always a bad thing. And because it does do positive things for some people, some people are going to keep trying to use it, no matter how much it is stigmatized.

AI steals from others’ work and makes a slurry of what it thinks you want to see. It doesn’t elevate art, it doesn’t further an idea, and it doesn’t ask you a question. It simply shows you pixels in an order it thinks you want, based on patterns its company stole when they trained it.

The only use for AI “art” is for soulless advertising.

The privatisation of the technology is bad, but not the technology itself. Labor should be socialised, and to be against this is not Marxist.

Proprietorship is heavily baked into our modern cultures due to liberalism, so you are going to hear a lot of bad takes, such as “stealing” or moralism based on the subjective quality of a given AI artwork’s aesthetics, even from people who consider themselves Marxists and communists.

The advance of technology at the cost of an individual’s job is the fault of the organisation and allocation of resources, i.e. capital, not the technology itself. Put it this way: people can be free to make art however they want to, and their livelihood should not have to depend on it.

If you enjoyed baking but lamented the industrialisation and mechanisation of baking because it cost you your livelihood, and you said it was because the machines were stealing your methods and the products didn’t taste as good, would we still consider it a Marxist position? Of course not.

The correct takes can be found here:

- https://redsails.org/artisanal-intelligence/

- the above author’s website in general has some good articles https://polclarissou.com/boudoir/archive.html

If you’re a Marxist, do not lament the weaver for the machine (Alice Malone): https://redsails.org/the-sentimental-criticism-of-capitalism/

Marxism is not workerism or producerism; both could lead to fascism.

Artisans concerned about proletarianisation, as they effectively lose their labor aristocracy or path to the petite bourgeoisie, may attempt to protect their material perspectives and have reactionary takes. Again, this obviously is not Marxist.

TLDR - bidetmarxman is correct. I would argue a lot of so-called socialists need self-reflection, but like I said, their views probably reflect their relative class positions, and it is really hard to convince someone to act against their perceived personal material benefits.

most of the sources are literally just ‘some techbro in California made a twitter post about the AI slop they just made’

Marxism is not workerism or producerism; both could lead to fascism.

what do you mean?

Artisans concerned about proletarianisation, as they effectively lose their labor aristocracy or path to the petite bourgeoisie, may attempt to protect their material perspectives and have reactionary takes. Again, this obviously is not Marxist.

I can assure you most artists are not making a living from art even before AI generative stuff undermined them.

I can assure you most artists are not making a living from art even before AI generative stuff undermined them.

Putting aside the presumptions imposed, please elaborate on how AI is undermining them; it may reveal the relative class dynamics behind the reaction.

most of the sources are literally just ‘some techbro in California made a twitter post about the AI slop they just made’

Even if true, why does it matter?

what do you mean?

What do you mean?

On producerism: https://socialistmag.us/2024/01/07/producerism-socialism-and-anti-imperialism-for-fools/

On workerism: https://redsails.org/losurdo-and-gargani/

(Edited to elaborate for anyone else lurking)

Putting aside the presumptions imposed, please elaborate on how AI is undermining them; it may reveal the relative class dynamics behind the reaction.

It’s stealing their work and reproducing it without compensation or reference. It’s similar to how record labels would travel to tribal communities, record their music, repackage it as ‘world music’ and make tens of millions without ever even naming the original artists who made the music to begin with.

AI models could in theory be used to cut down artists’ workload and such, but these are secondary effects compared to the actual utility they are made for and how they go about making what they do, which requires insane amounts of power. In practice, their purpose is to cut labor costs and remove workers from the equation entirely, creating a dead-end cultural feedback loop that continues the ‘we must reproduce the green line going up at all costs’ logic, based on datasets and the whims of the upper class. It is most similar to the 1930s Hollywood censorship era, with the means of production and the datasets used to produce art in the modern age being gatekept by the religious zealots and upper class of the US as a means of disseminating and producing social fascist ideology through the ideological state apparatuses.

The artists have been cut out of the equation entirely.

It’s not as simple as drawing a parallel with factory workers, where more efficient modes of production mean less need for workers to fill the roles. Artistic expression has value that can’t be captured by simple reproduction because it is a mirror of the human experience, which isn’t something an AI model can create, because it cannot create a context in which anyone is meant to give a shit about it. There are no heartbreaking works of staggering genius to be found behind an ML model, because the ‘story’ behind something like that is ‘I put a prompt into a machine and it hallucinated a story’.

This isn’t the same product as an actual work of art, no matter how much software engineers in California might try to convince you otherwise; it’s a different product and should be understood as such.

This is just a defence of proprietorship, and a romanticising of anti-capitalism into “anti-corporatism”. The artisans who argue this way are just defending failing bourgeois perspectives for themselves; they want to put up a walled garden around their skilled labor so that the unskilled effectively have no access to produce it for themselves, and they claim authenticity because their defense of private property is at a smaller scale. It is a highly reactionary take. If this were not the case, they would frame the fight over their loss of income as a fight against capital itself and not against the technology.

Actually labor doesn’t have value and it’s a bourgeois construct to think it does

shitultrassay.jpg

I get that you are hurting under capital, but if you keep this up, chances are you are going to go down a rabbit hole of reaction or at least neuter your radicalism. I wish you all the best and hope you don’t go down the wrong road, especially if you live in the imperial core. For me, Walter Rodney, Losurdo and Redsails.org helped a lot.

I don’t think it’s stealing; all current artists owe something to the work of artists who came before them.

What a crazy conversation. “Art” and “machine made” is an oxymoron, that’s it. Without the human component, the techniques and experiences of an artist (however complex they might be), with no intent or thought behind it there is no reason for it to exist. This idea is one step removed from sitting in a plastic garden with drone birds flying around, reading your AI book and listening to AI music, while an AI robot raises your AI engineered children. This is death of the human condition, not technological progress.

He’s right. I haven’t ever seen any AI art that I didn’t immediately think ‘wow this fucking sucks’ about, no matter how many Twitter posts and redsails articles get cited as sources saying their AI slop book made in a week can be sold for profit.

Just another way to be lazy

I don’t understand how every picture is supposed to be “art”. Art is subjective. To me only a Slammer or similar is art.

It’s a cultural thing for white people. Hell, I’d say that it’s one of the only things that’s truly white culture. When the word “art” gets used on something, it’s basically the same as anointing it. Any and every drawing, sculpture, text, video, and song is “art” by default. And some take it further to define everything made by a person as “art” too. Even though it’s vague as hell, it’s a deadly serious topic.

I’m not saying that other cultures don’t have similar ideas, but that it’s weird the way that white people universally agree that protecting “art”, whatever that is, is of the utmost importance.

It always bewildered me about the English language that every creation is called “art”. In Polish, art is “sztuka”, a word with quite a few meanings, but the most relevant is “a field of artistic activity distinguished by the aesthetic values it represents”, and the common understanding is that it has to actually represent some aesthetic values. This of course causes unending discussions about what art is and about its subjectivity, to the point that the adage “art is in the eye of the beholder” became universally accepted.

I like to bring up the example of Hawaiian Hula dance for that kind of reason. It’s a case where a kind of “art” is inseparable from culture, heritage and passing down stories. That is a lot different from simply doing what you feel like as art, as a form of “self expression.” Not that I’m judging the “self expression” point of view as bad; I’m just adding to the notion that what is considered art, and the importance associated with it, is not always the same across cultures.

Damn. What happened here? I think this is the most divisive topic I’ve seen on Lemmygrad.

To everyone reading this, please comment and downvote me if you would consider yourself “pro” AI art (art specifically, not LLMs in general) and comment and upvote me if you’re anti-AI art.

Please comment letting me know whether you upvoted or downvoted, and whether you create art yourself (doesn’t need to be professional, just as a hobby is fine), or if you actively work with artists, or even if you’ve ever had an interest in creating art but never really pursued it as a hobby.

I’m interested to know where the opinions lie based on their proximity to “traditional” art vs people who have never had any real interest in the art. This impromptu “poll” will probably get 0 response, but I am curious.

AI art is stealing even less than piracy is. If copying a digital movie without paying for it isn’t stealing, then how is generating a digital image based on thousands of digital images?

Intellectual property is a bad thing. It is a cornerstone of modern capitalism. The arguments for AI art being stealing all hinge on the false premise that intellectual property law is fair, equitable and just and not just a way for capitalists to maintain their monopolies on ideas.

Yes, artists deserve to be paid a fair share for their efforts, but only as much as everyone else. The issue is that artists are losing control of the means of production. Artists are rightly upset, but this should bring them into solidarity with the working classes. Instead, they want the working classes to rally to their cause. They want workers, who have had no control over the means of production for hundreds of years, to rise up and fight for the artists’ right to control their means of production. It’s neo-Luddism: they are railing against machines for stealing their jobs instead of railing against the capitalists hoarding all the wealth. It’s individualism bordering on narcissism. It lacks class consciousness.

This topic can be a good entry point for agitation if you have a soft touch. It’s hard to sound like you are on the side of an artist who feels they are being stolen from while convincing them that it is actually not theft, and explaining that the fear of losing their livelihood is real but is a feeling the entire working class has been battling with for centuries.

Artists’ visceral feelings about AI are very valuable because most of the working class has been desensitised to their lot in life. If artists were able to use their skills to remind the masses of this injustice, it could go a long way toward raising class consciousness. But since artists have been separated from the working class by their control of the means of production, getting them to pivot can be hard, as with any other petit bourgeoisie.

AI art is stealing even less than piracy is. If copying a digital movie without paying for it isn’t stealing, then how is generating a digital image based on thousands of digital images?

Humans also do it all the time. Going along with the IP mafia on AI is like requiring every even vaguely impressionist painter to pay royalties to Claude Monet, or every conventional fantasy author to pay them to Tolkien, except Tolkien also drew inspiration from previous works, so I guess whoever the lawful heirs of Snorri Sturluson and Elias Lönnrot are suddenly become very rich, except that they also compiled their works based on… and so on and on and on.

Intellectual property is a bad thing.

Even if we ignore every other impact of IP, it was historically always used by the publishing industry against the individual artist.

This is a bad take but I don’t have the energy to argue

It’s a multifaceted thing. I’m going to refer to it as image generation, or image gen, because I find that’s more technically accurate than “art” and doesn’t carry a connotation of artistic merit that isn’t earned.

Is it “stealing”? Image gen models have typically been trained on a huge amount of image data, in order for the model to learn concepts and be able to generalize. Whether because of the logistics of getting permission, a lack of desire to ask, or a fear that permission would not be given and the projects wouldn’t be able to get off the ground, I don’t know, but many AI models, image and text, have been trained in part on copyrighted material that they didn’t get permission to train on. This is usually where the accusation of stealing comes in, especially in cases where, for example, an image gen model can almost identically reproduce an artist’s style from start to finish.

On a technical level, models generally do not reproduce things exactly, and they don’t have any human-readable internal record of an exact thing, like you might find in a text file. They can imitate, and if overtrained on something, they might produce it so similarly that it seems like a copy, but some people get confused and think this means models have a “database” of images in them (they don’t).

Now, whether this changes anything as to “stealing” or not, I’m not taking a strong stance on here. If you consider it as something where makers of AI should be getting permission first, then obviously some are violating that. If you only consider it theft when an artist’s style can be reproduced to the extent that the artist isn’t needed to make stuff highly similar to what they make, some models are also going to be a problem in that way. But this is also getting into…

What’s it really about? I cannot speak concretely by the numbers, but my analysis is that a lot of it boils down to anxiety over being replaced existentially and anxiety over being replaced economically. The second one largely seems to be a capitalism problem and didn’t start with AI, but has arguably been hypercharged by it. Where image gen is different is that it’s focused on generating an entire image from start to finish. This is different from tools like drawing a square in an image illustrator program, where it can help you with components of drawing but you’re still having to do most of the work. It means someone who understands little to nothing about the craft can prompt a model to make something roughly like what they want (if the model is good enough).

Naturally, this is a concern from the standpoint of ventures trying to either drastically reduce the number of artists or replace them entirely.

Then there is the existential part, and this I think is a deeper question about generative AI that has no easy answer, but once again, it is something art has been contending with for some time because of capitalism and now has to confront much more drastically in the face of AI. Art can be propaganda, it can be culture and passing down stories (Hula dance), or, as is commonly said in the western context in my experience, it can be a form of self expression. Capitalism has long been watering down “art” into as much of a money-making formula as possible, not caring about the “emotive” stuff that matters to people. Generative AI is, so far, the peak of that trajectory. That’s not to say the only purpose of generative AI is to degrade or devalue art, but that it seems to enable about as close to a “meaningless content mill” as capitalism has been able to get so far.

In other words, it enables the production of “content” that is increasingly removed from any kind of authentic human experience or messaging. What implications this can have, I’m not offering a conclusive answer on. I do know one concern I’ve had, and that I’ve seen some others voice, is the cyclical nature of AI: because it can only generalize so far beyond its dataset, it reproduces a particular snapshot of a culture at a particular point in time, which might make capitalistic feedback-loop culture worse.

But I leave it at that for people to think about. It’s a subject I’ve been over a lot with a number of people and I think it is worth considering with nuance.