Everyone seems to be complaining about LLMs not actually being intelligent. Does anyone know if there are alternatives out there, either in theory or already made, that you would consider to be ‘more intelligent’ or have the potential to be more intelligent than LLMs?

Agreed with the points about defining intelligence, but on a pragmatic note, I’ll list some concrete examples of fields in AI that are not LLMs (I’ll leave it up to your judgement whether they’re “more intelligent” or not):

- Machine learning. Most of the concrete examples other people gave here were deep learning models; they’re used a lot, but they certainly don’t represent all of AI. ML is essentially fitting a function by tuning the function’s parameters using data. It has many sub-fields, such as uncertainty quantification, time-series forecasting, meta-learning, representation learning, surrogate modelling and emulation.

- Optimisation, containing both gradient-based and black-box methods. These methods are about finding parameter values that maximise or minimise a function. Machine learning is also an optimisation problem, and is usually performed using gradient-based methods.

- Reinforcement learning, which often involves a deep neural network to estimate state values, but is itself a framework for assigning values to states, and learning the optimal policy to maximise reward. When you hear about agents, often they will be using RL.

- Formal methods for solving NP-hard problems; popular examples include TSP and SAT. The aim is basically to solve these problems efficiently and with theoretical guarantees of accuracy. All of the hardware you use will have had its validity checked through this type of method at some point.

- Causal inference and discovery. Trying to identify causal relationships from observational data when randomised controlled trials are not feasible, using theoretical proofs to establish when we can and cannot interpret a statistical association as a causal relationship.

- Bayesian inference and learning theory methods, not quite ML but highly related. This means using Bayesian statistical methods, often MCMC, to infer posteriors whose marginal likelihoods are normally intractable. It’s mostly statistics, with AI helping out so that we can actually compute things.

- Robotics, not a field I know much about, but it’s about physical agents interacting with the real world, which comes with many additional challenges.
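To make the Bayesian bullet a bit more concrete: the trick behind MCMC is that you can sample from a posterior while only ever evaluating the *unnormalised* density (prior × likelihood), so the intractable marginal likelihood never has to be computed. Below is a minimal random-walk Metropolis–Hastings sketch in Python on a made-up toy problem (a coin’s bias given 7 heads in 10 flips, uniform prior); the helper names, step size and burn-in are my own illustrative choices. This toy case happens to have a closed-form Beta(8, 4) posterior, which makes it easy to sanity-check the sampler.

```python
import math
import random

def log_unnormalised_posterior(p, heads=7, flips=10):
    """log(prior * likelihood) for coin bias p, up to an additive constant.

    Uniform prior on (0, 1) and a binomial likelihood; the normalising
    constant (the marginal likelihood) is never computed.
    """
    if not 0.0 < p < 1.0:
        return -math.inf  # zero prior density outside (0, 1)
    return heads * math.log(p) + (flips - heads) * math.log(1.0 - p)

def metropolis_hastings(log_target, start=0.5, steps=20_000, step_size=0.1, seed=0):
    """Random-walk Metropolis-Hastings with a symmetric Gaussian proposal."""
    rng = random.Random(seed)
    current, current_lp = start, log_target(start)
    samples = []
    for _ in range(steps):
        proposal = current + rng.gauss(0.0, step_size)
        proposal_lp = log_target(proposal)
        # Accept with probability min(1, target(proposal) / target(current));
        # the unknown normalising constant cancels in this ratio.
        if math.log(1.0 - rng.random()) < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
        samples.append(current)
    return samples

samples = metropolis_hastings(log_unnormalised_posterior)
burned = samples[2_000:]  # discard burn-in
posterior_mean = sum(burned) / len(burned)
print(f"MCMC posterior mean:  {posterior_mean:.3f}")
print(f"Exact Beta(8, 4) mean: {8 / 12:.3f}")
```

In real problems the posterior has no closed form, which is exactly when a sampler like this earns its keep.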

This list is by no means exhaustive, and there is often overlap between fields as they use each other’s solutions to advance their own state of the art, but I hope this helps people who always hear that “AI is much more than LLMs” but don’t know what else is out there. A common theme is that we use computational methods to answer questions, particularly those we couldn’t easily answer ourselves.

To me, what sets AI apart from the rest of computer science is that we don’t do “P” problems: if there is a method available to directly or analytically compute the solution, I usually wouldn’t call it AI. As a basic example, I don’t consider computing the coefficients of y = ax + b analytically to be AI, but I do consider general approximations of linear models using ML to be AI.
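The y = ax + b example can be made concrete. Here is a small Python sketch on made-up data that contrasts the analytic least-squares solution (which, by the definition above, wouldn’t count as AI) with fitting the same two parameters by gradient descent on the mean squared error, i.e. the “tune parameters using data via optimisation” loop that ML generalises to models without a closed-form solution. The data, learning rate and step count are arbitrary illustrative choices.

```python
def closed_form_fit(xs, ys):
    """Ordinary least squares for y = a*x + b, computed analytically."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    a = cov_xy / var_x
    return a, mean_y - a * mean_x

def gradient_descent_fit(xs, ys, lr=0.05, steps=5_000):
    """Fit the same a, b by iteratively minimising the mean squared error."""
    a, b, n = 0.0, 0.0, len(xs)
    for _ in range(steps):
        residuals = [(a * x + b) - y for x, y in zip(xs, ys)]
        grad_a = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]  # made-up data, roughly y = 2x + 1 plus noise

print("closed form:      a=%.4f b=%.4f" % closed_form_fit(xs, ys))
print("gradient descent: a=%.4f b=%.4f" % gradient_descent_fit(xs, ys))
```

Both routes land on the same line here; the difference is that the gradient-descent loop still works when the model is something like a deep network and no analytic formula exists.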

I presume you don’t want an answer from an LLM, but maybe somebody can give their two cents about the options below:

- CNNs

- RL

- GNNs

- GBDT

- Transformer Models

- Symbolic AI/Logic Engines

- VAEs

They all seem to be specialized AI tools, so this may not answer your question directly.

I think the answer might be that a collection of specialized AI tools would be better than LLMs.

Thinking about it, a collection of federated, specialized LLMs would be great, because you’re not limited to a single tuning.

I mean, LLMs are just next-token-in-sequence predictors. That’s all they do. They take an input sequence and, based on that sequence, predict what comes next. It’s not magic.

It’s just that embedded in language (apparently) there is information about meaning, at least as humans interpret it. But these methods can be applied to any kind of data, most of which doesn’t come prepackaged with some human interpretation of meaning.
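As a deliberately tiny illustration of “predict the next thing from the sequence so far”, here is a bigram model in Python: it counts which token follows which in a made-up toy corpus, then generates text greedily, one token at a time, feeding each prediction back in as the next input. Real LLMs replace the count table with a transformer conditioned on the whole context and sample from a learned probability distribution, so treat this only as a sketch of the autoregressive loop, not of how an LLM is built. The function names are my own.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count, for each token, how often each other token follows it."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, token):
    """Return the most frequent successor of `token`, or None if it has none."""
    if not follows[token]:
        return None
    return follows[token].most_common(1)[0][0]

def generate(follows, start, length):
    """Autoregressive loop: each prediction becomes the next input."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out

corpus = "the cat sat on the mat and the cat ate".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 5)))
```

Note that ties (here “cat” is followed once by “sat” and once by “ate”) are broken by first occurrence, which is how `Counter.most_common` orders equal counts.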

Just to toot my own bugle, here is some output of a transformer model I’m building/working on this very second (as in, it’s on my second monitor):

Here I’m using time-series climate, terrain, and satellite data to predict the amount of carbon stored in soils over time. It’s the same idea as an LLM, except that instead of predicting the next token embedding in the sequence, I’m asking the model to predict the next “climate/spectral/terrain/soil” state in the sequence.

Typical models in this domain can maybe reach an R² of about 0.35–0.4. Here you can see I’m getting an R² of 0.54.
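For anyone unfamiliar with the metric: R² (the coefficient of determination) compares a model’s squared errors with those of a baseline that always predicts the mean, R² = 1 − SS_res / SS_tot. A value of 1.0 is a perfect fit, 0 is no better than predicting the mean, and it can go negative. A minimal Python version, using made-up numbers rather than the soil-carbon data:

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))  # model error
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)              # mean-baseline error
    return 1.0 - ss_res / ss_tot

observed = [2.0, 4.0, 6.0, 8.0]   # hypothetical measurements
predicted = [2.5, 3.5, 6.5, 7.5]  # hypothetical model output
print(f"R^2 = {r_squared(observed, predicted):.2f}")  # R^2 = 0.95
```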

All ML as we currently know it is basically giant piles of linear algebra and an optimization function.

Edit:

Here is a bonus panel that I just made:

(PDSI: Palmer Drought Severity Index, basically a measure of how wet or dry conditions are.)

All fine and dandy. Do you have an answer to OP’s question?

I think others are mostly addressing that issue, which is why I went a different direction.

It’s not really an answerable question, because we don’t even have great definitions of human intelligence, let alone of things that are only defined by abstract comparison to that concept.

Transformers, specifically the attention block, are just one step in a long and ongoing tradition of advancement in ML. Check out the paper “Attention Is All You Need”; it was a pretty big deal. So I expect AI development to continue as it has. Things had already improved substantially even without LLMs, but boy howdy, transformers were a big leap. There is much more advancement and focus than before, and maybe that will speed things up. But regardless, we should expect better models and architectures in the future.
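For readers who haven’t seen it, the core operation from “Attention Is All You Need” is scaled dot-product attention, softmax(QKᵀ / √d_k) V. Below is a minimal single-head NumPy sketch with a causal mask, so that each position can only attend to itself and earlier positions, which is the setup used to train a model to predict the next element of a sequence. It is a generic sketch, not the architecture of the soil-carbon model above: the weight matrices are random placeholders rather than trained parameters, and real transformer blocks add multiple heads, residual connections, layer normalisation and feed-forward layers.

```python
import numpy as np

def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention with a causal mask.

    x: (seq_len, d_model) sequence of input vectors.
    w_q, w_k, w_v: (d_model, d_k) projection matrices.
    Returns the attended outputs and the attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)  # (seq_len, seq_len) similarity scores
    # Mask out future positions so position i only sees positions <= i.
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    # Row-wise softmax, subtracting the row max for numerical stability.
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))  # e.g. 4 time steps of state vectors
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = causal_self_attention(x, w_q, w_k, w_v)
print(out.shape)             # (4, 8)
print(np.round(weights, 2))  # entries above the diagonal are all zero
```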

Another way to think about this is scale. The energy density of these systems will (I think) become a limiting factor before anything else. This is not to say that all of the components of these systems are of the same quality: a node in a transformer is of higher quality than one in a U-Net, and a worm synapse is of lower quality than a human synapse.

But, we can still compare the number of connections, not knowing if they are equal quality.

So an industry-tier LLM has maybe 0.175 trillion connections. A human brain has about 100x that number. If we believed the connections to be of equal quality, then LLMs would need to be 100x larger to compete with humans (but we know they are already better than most humans on many tests). Keep in mind that a mature tree could have well over 16 000–56 000 trillion connections via its plasmodesmata.

A human brain draws ~20 watts at rest. An LLM takes about 80 watts per inquiry reference. So an LLM takes quite a bit more energy per connection to run: we’re running 100x the connections on 1/4 of the power, which works out to roughly 400x less power per connection. We would need to see roughly a 400x improvement in LLMs for them to be energy-equivalent to humans (again, assuming the same “quality” of connection).
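Spelling out the per-connection arithmetic with the rough figures from this comment (about 0.175 trillion connections and ~80 W for the LLM; 100x the connections and ~20 W for the brain), under the same equal-quality assumption. These are the comment’s ballpark numbers, not measurements.

```python
llm_connections = 0.175e12                  # rough figure from the comment
brain_connections = 100 * llm_connections   # "about 100x that number"
llm_watts = 80.0    # per "inquiry reference", as stated above
brain_watts = 20.0  # resting power

llm_w_per_conn = llm_watts / llm_connections
brain_w_per_conn = brain_watts / brain_connections
ratio = llm_w_per_conn / brain_w_per_conn  # (80 / 20) * 100
print(f"LLM uses ~{ratio:.0f}x more power per connection than the brain")
```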

So we might see a physical limit to how intelligent an LLM can be, so long as it’s running on doped-silicon chip architectures. Proximity matters a lot for the machines we use to run LLMs. We can’t necessarily just add more processors and get smarter machines; they need to be smaller and more energy efficient.

This is an approximation of Moore’s law, with the physical limits of silicon mapped on:

So we really “can’t”, in big wavy parens, get close to what we would need to reach the computational density we see in humans. Then you have plants, whose energy use is literally negative, and which have orders of magnitude more connections per kg than either silicon or human brain tissue is capable of.

So now we get to the question “Are all connections created equal?”, which I think we can answer pretty easily: no, as the examples I gave (and many, many more) show.

We will see architectural improvements to current ML approaches.

This is all good info, thanks.

I just have one minor nitpick:

An LLM takes about 80 watts per inquiry reference. So a LLM takes quite a bit more energy per connection to run. We’re running 100x the connections on 1/4th the power.

The math is wrong here. At “rest”, the brain is still doing work. What you call “an inquiry reference” is just one LLM operation, and I’m sure the human brain is doing much more than that. A human being is thinking “What should I have for dinner?”, “What should I have said to Gwen instead?”, “That ceiling needs some paint”, “My back hurts a bit”. That clang you heard earlier in the distance? You didn’t pay attention to it at the time, but your brain surely did. And let’s not even get started on the bodily functions that the brain (yes, the brain) must manage.

So an LLM is much, much, much more resource intensive than that claim.

I mean, given that LLMs aren’t intelligent, I guess you could include video game NPCs. They are often referred to as AI but are also not anything remotely close to true AI the same way LLMs aren’t close to true AI.

I would propose calling such “AI” an “AIM”: Artificially Intelligent Mimicry, because they mimic intelligence without actually possessing it.

The “artificial” in AI appears to be losing its meaning the same way that “literally” is losing its original meaning.

Artificial means something made or produced by human beings rather than occurring naturally. It doesn’t mean the artificially created thing isn’t whatever it claims to be.

An artificially created diamond is still a diamond.

Artificial intelligence should, therefore, be intelligent.

What would need to change about LLMs to make them closer to ‘true AI’ or even real intelligence? Or do you think there needs to be a different approach altogether?

‘true AI’ and ‘real intelligence’ don’t have any extrinsic meaning.

If you can’t tell it’s not a human, is that good enough? I mean, we would have to dumb the current systems down to fool people these days. Then you ask them something off-base and they get it completely wrong. Then again, you ask a person a question and they get it completely wrong, too.

Without a useful and static definition of intelligence, it’s not really an answerable question.

To answer this sort of question, you first have to ask how one quantifies intelligence in an unbiased and rigorous way.

For instance, can an LLM be considered intelligent if it speaks like a person but can’t do certain math problems?

There are lots of niches for non-LLM AI models in tech that aren’t “intelligent” but can do quite a good job when it comes to narrow, well-defined tasks like image recognition, for example.

Could a person be considered intelligent if they can’t do math?

Which math? All math?

Don’t miss the forest for the trees. What I’m saying is that, unless you have an objective and well-defined definition for intelligence, it becomes impossible to classify what is or isn’t intelligent.

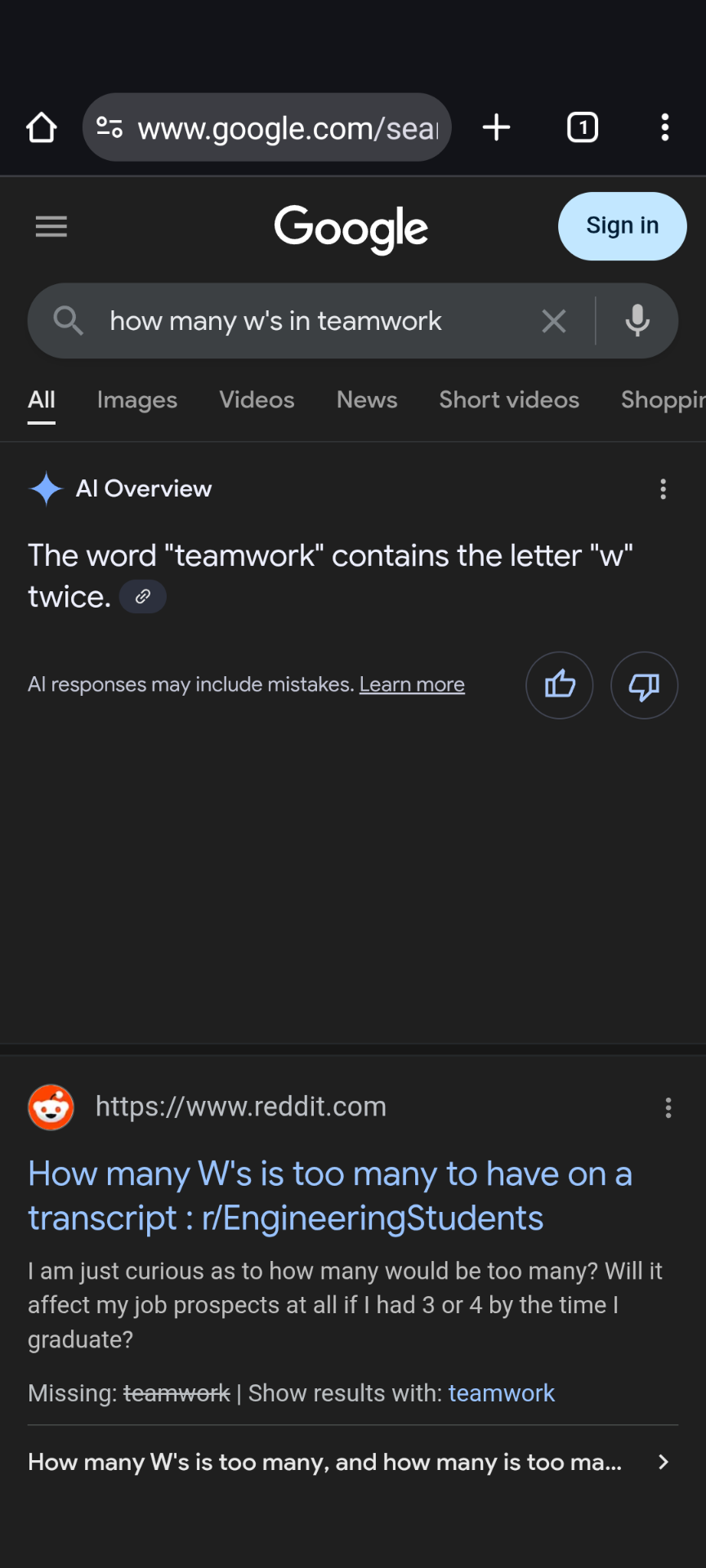

The instance I was referring to is the inability of some LLMs to count the occurrences of letters in words. Case in point:

There are native English speakers who cannot answer that question.

Are they not intelligent?

You fail to comprehend my previous comment. Are you intelligent?

If I’m missing the point, then what is the answer to my question?

There’s no generally accepted answer. That’s the point.

I assume most of the people saying LLMs are not intelligent are comparing it to intelligent life, like humans and perhaps even other animals.

I’m also referring to intelligence in that way. However, if you can think of more ways to express it then I would be open to listening.

I think something you should come away from this thread with is that we don’t have good or agreed-upon definitions of what intelligence is, which makes answering your question really difficult.

Yeah, I already have that idea. Discerning intelligence is more of a philosophical discussion and not quite the purpose of this thread.

I would like to believe that the people proudly saying “AI isn’t intelligent” could also proudly say what intelligence is, but I know that’s expecting too much of average internet users.

“AI isn’t intelligent”

“AI isn’t intelligent” is a form of thought-terminating cliché. It’s a low-effort way of virtue signalling and gatekeeping, and it’s really bad on Lemmy.

It’s not a defense of shitty corporations to be interested in computation, math, machine learning, etc., but most lemmings are, well, :whispers: lemmings.

Thank you so much for sharing that article with me.

I’ve had similar ideas, but no formal exposure.

I mean this is literally my other screen:

So I feel obligated to at least try and answer.

I think that it’s important to consider that language can evolve or mean different things over time. Regarding “artificial intelligence,” this is really just a renaming of machine learning algorithms. They definitely do seem “intelligent” but having intelligence and seemingly having it are two different things.

Currently, real AI, or what is being called “Artificial General Intelligence,” doesn’t exist yet.

How are you defining intelligence anyways?


It would actually depend on the people saying LLMs are not intelligent.

I assume they’re referring to intelligence as we see in humans and perhaps animals.

I want to say then that probably counts as intelligence, as you can converse with LLMs and have really insightful discussions with them, but I personally just can’t agree that they are “intelligent” given that they do not understand anything they say.

I’m not sure if you’ve read about the Chinese Room, but Wikipedia has a good article on it.

I think what you are describing is “agency” and not necessarily intelligence.

A goldfish has agency, but no amount of exposure to linear algebra will give it the ability to transpose a matrix.

What I tried to say is that if the LLM doesn’t actually understand anything it says, it’s not actually intelligent, is it? Inputs get astonishingly good outputs, but it’s not real AI.

LLM doesn’t actually understand anything it says

Do you?

Do I?

Where do thoughts come from? Are you the thought or the thing experiencing the thought? Which holds the intelligence?

I know enough about thought to know that you aren’t planning the words you are about to think next, at least not with any conscious effort. I also know that people tend to not actually know what it is they are trying to say or think until they go through the process; start talking and the words flow.

Not altogether that different from next-token prediction; maybe just with a network 100x as deep…

This gets really deep into how we’re all made of non-living things and atoms and yet here we are, and why it is that no other planet has life like us, etc. Also super philosophical!

But truly, LLMs don’t understand the things they say, and Apple apparently just put out a paper saying they don’t reason either (if you consider that to be different from understanding). They’re claiming it’s all fancy pattern recognition. (Link below if you’re interested.)

https://machinelearning.apple.com/research/illusion-of-thinking

Another difference between a human and an LLM is likely the ability to understand semantics within syntax, rather than just text alone.

I feel like there’s more that I want to add but I can’t quite think of how to say it so I’ll stop here.

An interesting study I recall from my neuroscience classes is that we “decide” on what to do (or in this case, what to say) slightly before we’re aware of the decision, and then our brain comes up with a story about why we made that decision so that it feels like we have agency.

Thank you. This is exactly what I was trying to discuss.

Do you think there’s anything that can change about AI to make it intelligent by your standard?

I’m of the idea that AI will eventually “emerge” from ongoing efforts to produce it, so asking whether anything can “change” about what people currently call AI is somewhat moot.

I think LLMs are a dead end for producing real AI, though no one REALLY knows, because it just hasn’t happened yet.

Not recommending anyone buy crypto, but I’ve been following Qubic and find it really interesting. It’s anyone’s guess what organization will create real AI, though.