Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this.)

another cameo appearance in the TechTakes universe from George Hotz with this rich vein of sneerable material: The Demoralization is just Beginning

wowee where to even start here? this is basically just another fucking neoreactionary screed. as usual, some of the issues identified in the piece are legitimate concerns:

Wanna each start a business, pass dollars back and forth over and over again, and drive both our revenues super high? Sure, we don’t produce anything, but we have companies with high revenues and we can raise money based on those revenues…

… nothing I saw in Silicon Valley made any sense. I’m not going to go into the personal stories, but I just had an underlying assumption that the goal was growth and value production. It isn’t. It’s self licking ice cream cone scams, and any growth or value is incidental to that.

yet, when it comes to engaging with these issues, the analysis presented is completely detached from reality and devoid of any evidence of more than a dozen seconds of thought. his vision for the future of America is not one that

kicks the can further down the road of poverty, basically embraces socialism, is stagnant, is stale, is a museum

but one that instead

attempt[s] to maintain an empire.

how, you may ask?

An empire has to compete on its merits. There’s two simple steps to restore american greatness:

- Brain drain the world. Work visas for every person who can produce more than they consume. I’m talking doubling the US population, bringing in all the factory workers, farmers, miners, engineers, literally anyone who produces value. Can we raise the average IQ of America to be higher than China?
- Back the dollar by gold (not socially constructed crypto), and bring major crackdowns to finance to tie it to real world value. Trading is not a job. Passive income is not a thing. Instead, go produce something real and exchange it for gold.

sadly, Hotz isn’t exactly optimistic that the great american empire will be restored, for one simple reason:

[the] people haven’t been demoralized enough yet

an empire has to compete on its merits

Brain drain the world. Work visas for every person who can produce more than they consume. I’m talking doubling the US population, bringing in all the factory workers, farmers, miners, engineers, literally anyone who produces value.

Okay, I mean, that’s coherent policy. I really don’t like the “produces more than they consume” caveat, ’cause how do you quantify that? But yes, immigration is actually good…

Can we raise the average IQ of America to be higher than China?

aaaand it’s eugenics, fuck, how does this keep happening


State Dept. to use AI to revoke visas of foreign students who appear “pro-Hamas”

https://www.axios.com/2025/03/06/state-department-ai-revoke-foreign-student-visas-hamas

Odds that this catches some Israeli nationalists in the net because they were posting about Hamas and arguing with the supposed sympathizers? Given their moves to gut the bureaucracy, I can’t imagine they have the manpower to have a human review everyone this is going to flag.

New thread from Ed Zitron, focusing on the general trashfire that is CoreWeave. Jumping straight to the money-shot, he noted how the company is losing money selling shovels in the gold rush:

You want my off-the-cuff prediction? CoreWeave will probably be treated as the Lehman Brothers of the 2020s, an unofficial mascot of everything wrong with Wall Street (if not the world) during the AI bubble.

Nvidia’s current downturn is probably due more to the disastrous launch of its 5000 series than to AI.

If it were AI, I’m guessing the drop would be larger.

Ezra Klein is the biggest mark on earth. His newest podcast description starts with:

Artificial general intelligence — an A.I. system that can beat humans at almost any cognitive task — is arriving in just a couple of years. That’s what people tell me — people who work in A.I. labs, researchers who follow their work, former White House officials. A lot of these people have been calling me over the last couple of months trying to convey the urgency. This is coming during President Trump’s term, they tell me. We’re not ready.

Oh, that’s what the researchers tell you? Cool cool, no need to hedge any further than that, they’re experts after all.

I listened to ezra klein’s podcast sometimes before he moved to the NYT and thought it was occasionally interesting. every time I’ve listened to an episode, it’s been the most credulous shit I’ve ever heard

like, there was one episode where he interviewed a woman whose shtick was spending the whole time talking in what I can loosely call subtext about how she fucked an octopus. she’d go on about how they were ‘tasting each other’ and their ‘fluids were mingling’ and such and he’d just be like wow what a fascinating encounter with an alien intelligence. this went on for an hour and at no point did he seem to have a clue what was going on

the wat.jpeg

ok I looked up the transcript and these were the most spicy bits, which in my memory had come to dominate the whole episode

EDIT: her shtick does not seem to have been confined to this interview

another day volunteering at the octopus museum. everyone keeps asking me if they can fuck the octopus. buddy,

@blakestacey @sc_griffith my “No I did not fuck the octopus” t-shirt is raising a lot of questions already answered by the shirt

Has Klezra Ein ever used the term “useful idiot”?

Reminds me of this gem from Ezra a few years back about the politics of fear (buddy, you bought into the politics of fear with your support for the Iraq War)

New ultimate grift dropped, Ilya Sutskever gets $2B in VC funding, promises his company won’t release anything until ASI is achieved internally.

I’m convinced that these people have no choice but to do their next startup, especially if their names are already prominent in the press like Sutskever and Murati. Once you’re off the grift train, there is no easy way back on. I guess you can maybe sneak back in as a VC staffer or an independent board member, but that doesn’t seem quite as remunerative.

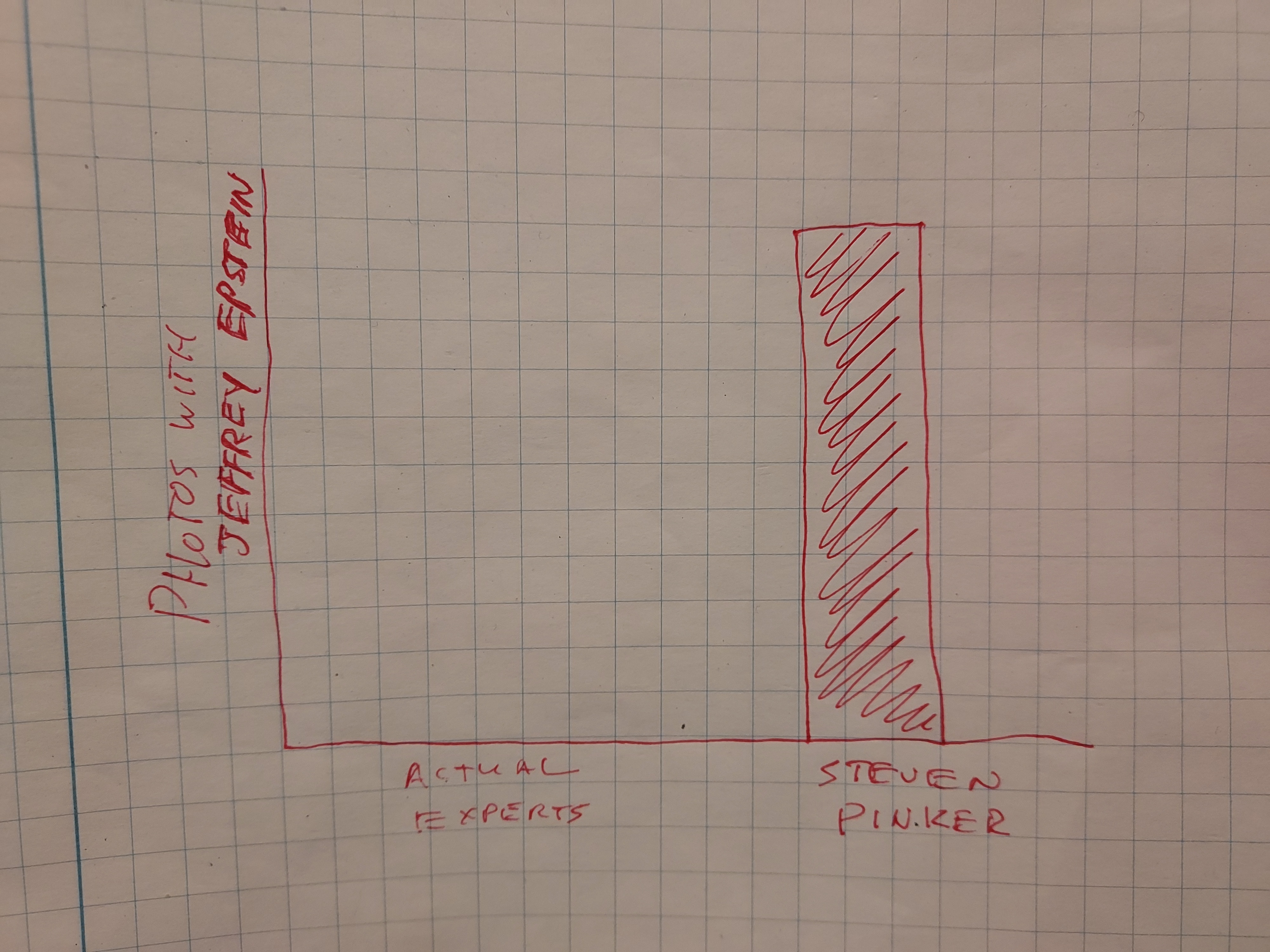

The genocide understander has logged on! Steven Pinker bluechecks thusly:

Having plotted many graphs on “war” and “genocide” in my two books on violence, I closely tracked the definitions, and it’s utterly clear that the war in Gaza is a war (e.g., the Uppsala Conflict Program, the gold standard, classifies the Gaza conflict as an “internal armed conflict,” i.e., war, not “one-sided violence,” i.e., genocide).

You guys! It’s totes not genocide if it happens during a war!!

Also, “Having plotted many graphs” lolz.

Jewish people fought back in the ghettos, so the Nazis didn’t do a genocide! Gandhi, by not fighting back, tricked the Brits into doing a genocide.

What a fucked up broken classification.

E: I’m also reminded of the ‘armed/unarmed’ trick American racists pull: when a POC gets killed by the cops, suddenly it matters a lot whether a weapon-shaped object was perhaps nearby, despite this never being treated as grounds for execution when white people come into contact with the police.

E2: also, a small annoyance: if you track all the definitions, you should be able to understand that for others who pick a different definition, it is a genocide. Hell, I can give several definitions of fascism, and if I were to pick one under which some group doesn’t count, that doesn’t mean I’m correct, nor does it make the group less bad. They are still committing war crimes and ethnic cleansing (condemned by all the big human rights orgs and the UN). But thanks for scientifically proving it is impossible to disappear into nothing by crawling up your own ass.

Pinker tries not to be a total caricature of himself challenge: profoundly impossible

specifically this caricature:

incredible, where is this from?

https://www.currentaffairs.org/news/2018/08/comic-steven-pinker-certified-grief-counselor

I don’t keep this link on hand, I just google “pinker comic” and find it.

It is from 2018 (also pre-Nathan’s capitalist class consciousness reveal arc, iirc). I wonder what Pinker actually said during covid.

Early covid (july 2020) interview:

charitable take: he has the good sense to not make any major claims about the pandemic, other than to speculate that it would worsen his go-to metric of “extreme poverty”, which is… fine, i guess.

neutral take: nothing extraordinarily damning other than his usual takes.

uncharitable: this is of course early pandemic, so maybe pinker hasn’t found the angle to sell his normal shit with.

Side note: does pinker ever wonder why extreme poverty has been reduced? I can’t imagine he thinks it’s anything other than liberal democracy and US foreign interference, when the real answer is, like, China (a country that Pinker definitely believes is counter to said liberal democracy and the US) continuing to develop economically. Basically I want him to squirm as he tries and fails to resolve the cognitive dissonance.

google stepping up their quest to disorganize the world’s information

what if, right, we made a search engine so good it became the verb for searching, and then we replaced it with a robot with a concussion

Jan Marsalek, occasionally mentioned here, has been implicated in the surveillance (sometimes comically bad) and planned murder of journalists who crossed him: https://theins.press/en/inv/279034 https://www.euronews.com/2025/03/07/uk-court-convicts-three-bulgarians-of-spying-for-russia

I was discussing some miserable AI-related gig work I tried out with my therapist. doomerism came up and I was forced to explain rationalists to him. from now on I would prefer that all topics I have ever talked to any of you about be irrelevant to my therapy sessions

Fellas, 2023 called. Dan (and Eric Schmidt wtf, Sinophobia this man down bad) has gifted us with a new paper and let me assure you, bombing the data centers is very much back on the table.

"Superintelligence is destabilizing. If China were on the cusp of building it first, Russia or the US would not sit idly by—they’d potentially threaten cyberattacks to deter its creation.

@ericschmidt @alexandr_wang and I propose a new strategy for superintelligence. 🧵

Some have called for a U.S. AI Manhattan Project to build superintelligence, but this would cause severe escalation. States like China would notice—and strongly deter—any destabilizing AI project that threatens their survival, just as how a nuclear program can provoke sabotage. This deterrence regime has similarities to nuclear mutual assured destruction (MAD). We call a regime where states are deterred from destabilizing AI projects Mutual Assured AI Malfunction (MAIM), which could provide strategic stability.

Cold War policy involved deterrence, containment, nonproliferation of fissile material to rogue actors. Similarly, to address AI’s problems (below), we propose a strategy of deterrence (MAIM), competitiveness, and nonproliferation of weaponizable AI capabilities to rogue actors.

Competitiveness: China may invade Taiwan this decade. Taiwan produces the West’s cutting-edge AI chips, making an invasion catastrophic for AI competitiveness. Securing AI chip supply chains and domestic manufacturing is critical.

Nonproliferation: Superpowers have a shared interest to deny catastrophic AI capabilities to non-state actors—a rogue actor unleashing an engineered pandemic with AI is in no one’s interest. States can limit rogue actor capabilities by tracking AI chips and preventing smuggling.

“Doomers” think catastrophe is a foregone conclusion. “Ostriches” bury their heads in the sand and hope AI will sort itself out. In the nuclear age, neither fatalism nor denial made sense. Instead, “risk-conscious” actions affect whether we will have bad or good outcomes."

Dan literally believed 2 years ago that we should have strict thresholds on training models over a certain size lest a big LLM spawn superintelligence (thresholds we have since well passed, and somehow we are not paper clip soup yet). If all it takes to make super-duper AI is a big data center, then how the hell can you have scenarios like mutually assured destruction? You literally cannot tell what they are doing in a data center from the outside (maybe a building is using a lot of energy, but it’s not like you can say, “oh, they are about to run superintelligence.exe, sabotage the training run”). MAD “works” because satellites make it obvious when the nukes are flying. If the deepseek team is building skynet in their attic for 200 bucks, this shit makes no sense. Ofc, this also assumes one side will have a technology advantage, which is the opposite of what we’ve seen. The code to make these models is a few hundred lines! There is no moat! Very dumb, do not show this to the orangutan and muskrat. Oh wait! Dan is Musky’s personal AI safety employee, so I assume this will soon be the official policy of the US.

link to bs: https://xcancel.com/DanHendrycks/status/1897308828284412226#m

I guess now that USAID is being defunded and the government has turned off its anti-Russia/China propaganda machine, private industry is taking over the US hegemony psyop game. Efficient!!!

/s /s /s I hate it all

Mutual Assured AI Malfunction (MAIM)

The proper acronym should be M’AAM. And instead of a ‘Roman salute’ they can tip their fedoras as a distinctive sign 🤷‍♂️

New piece from Brian Merchant, focusing on Musk’s double-tapping of 18F. In lieu of going deep into the article, here’s my personal sidenote:

I’ve touched on this before, but I fully expect that the coming years will deal a massive blow to tech’s public image, with tech likely to be viewed as “incompetent fools at best and unrepentant fascists at worst”. With the wanton carnage DOGE is causing (and indirectly crediting to AI), I expect Musk’s governmental antics will deal plenty of damage on their own.

18F’s demise in particular will probably also deal a blow on its own - 18F was “a diverse team staffed by people of color and LGBTQ workers, and publicly pushed for humane and inclusive policies”, as Merchant put it, and its demise will likely be seen as another sign of tech revealing its nature as a Nazi bar.

Might be something interesting here, assuming you can get past the paywall (which I currently can’t): https://www.wsj.com/finance/investing/abs-crashed-the-economy-in-2008-now-theyre-back-and-bigger-than-ever-973d5d24

Today’s magic economy-ending words are “data centre asset-backed securities”:

Wall Street is once again creating and selling securities backed by everything—the more creative the better…Data-center bonds are backed by lease payments from companies that rent out computing capacity

@rook @blakestacey here’s an archive Link https://archive.ph/h6fbA

this archive has it without paywall

Robert Evans on Ziz and Rationalism:

https://bsky.app/profile/iwriteok.bsky.social/post/3ljmhpfdoic2h

https://bsky.app/profile/iwriteok.bsky.social/post/3ljmkrpraxk2h

J. Oliver Conroy’s Ziz piece is out. Not odious at a glance.

Yudkowsky was trying to teach people how to think better – by guarding against their cognitive biases, being rigorous in their assumptions and being willing to change their thinking.

No he wasn’t.

In 2010 he started publishing Harry Potter and the Methods of Rationality, a 662,000-word fan fiction that turned the original books on their head. In it, instead of a childhood as a miserable orphan, Harry was raised by an Oxford professor of biochemistry and knows science as well as magic

No, Hariezer Yudotter does not know science. He regurgitates the partial understanding and the outright misconceptions of his creator, who has read books but never had to pass an exam.

Her personal philosophy also draws heavily on a branch of thought called “decision theory”, which forms the intellectual spine of Miri’s research on AI risk.

This presumes that MIRI’s “research on AI risk” actually exists, i.e., that their pitiful output can be called “research” in a meaningful sense.

“Ziz didn’t do the things she did because of decision theory,” a prominent rationalist told me. She used it “as a prop and a pretext, to justify a bunch of extreme conclusions she was reaching for regardless”.

“Excuse me, Pot? Kettle is on line two.”

It goes without saying that the AI-risk and rationalist communities are not morally responsible for the Zizians any more than any movement is accountable for a deranged fringe.

When the mainstream of the movement is ve zhould chust bomb all datacenters, maaaaaybe they are?

Ziz helpfully suggested I use a gun with a potato as a makeshift suppressor, and that I might destroy the body with lye

I looked up a video of someone trying to use a potato as a suppressor and was not disappointed.

you undersold this

that guy’s face, amazing

if this is peak rationalist gunsmithing, i wonder what their peak chemical engineering looks like

the body is placed in a pressure vessel which is then filled with a mixture of water and potassium hydroxide, and heated to a temperature of around 160 °C (320 °F) at an elevated pressure which precludes boiling.

Also, lower temperatures (98 °C (208 °F)) and pressures may be used such that the process takes a leisurely 14 to 16 hours.

Well that sounds like a great way to either make a very messy explosion or have your house smell like you’re disposing of a corpse from a mile away.

That’s what we call a win-win scenario

I’m fairly sure that a 50-gallon drum of lye at room temperature will take care of a body in a week or two. Not really suited to volume “production”, which is what water cremation businesses need.

as a rule of thumb, all else being equal, reaction rates go up 2x or 3x for every 10 °C increase in temperature, so it would take anywhere between 250x and 6500x longer (4 months to 10 years??). but everything else really doesn’t stay equal here, because there are things like lower solubility of something that now coats something else and prevents reaction, fat melting, proteins denaturing thermally, and lack of stirring from convection and boiling.

it will also reek of ammonia the entire time

I feel like it still starts off too credulous towards the rationalists, but it’s still an informative read.

Around this time, Ziz and Danielson dreamed up a project they called “the rationalist fleet”. It would be a radical expansion of their experimental life on the water, with a floating hostel as a mothership.

Between them, Scientology and the libertarians, what the fuck is it with these people and boats?

a really big boat is the ultimate compound. escape even the surly bonds of earth!

What we really need to do is lash together a bunch of icebergs…

I assume it’s to get them to cooperate.

Ah, yes. The implication.

…what the fuck is it with these people and boats?

I blame the British for setting a bad example

Hey, we’re an island nation which ruled over a globe-spanning empire, we had a damn good reason to be obsessed with boats.

Couldn’t exactly commit atrocities on a worldwide scale without 'em, after all.

Starting things off here with a sneer thread from Baldur Bjarnason:

Keeping up a personal schtick of mine, here’s a random prediction:

If the arts/humanities gain a significant degree of respect in the wake of the AI bubble, they will almost certainly gain that respect at the expense of STEM’s public image.

Focusing on the arts specifically, the rise of generative AI and the resultant slop-nami has likely produced an image of programmers/software engineers as inherently incapable of making or understanding art, given AI slop’s soulless nature and inhumanly poor quality, if not outright hostile to art/artists thanks to gen-AI’s use in killing artists’ jobs and livelihoods.